OS Note Chapter 4: Threads

Overview

Motivation Behind Threads

- Multiple tasks with the application can be implemented by separate threads (Scalability, Responsiveness )

- Update display

- Fetch data

- Spell checking

- Answer a network request

- Process creation is heavy-weight while thread creation is light- weight (economy)

- Threads run within the application/process (Resource Sharing)

- Can simplify code, increase efficiency

- Most modern applications are multithreaded

- Kernels are generally multithreaded

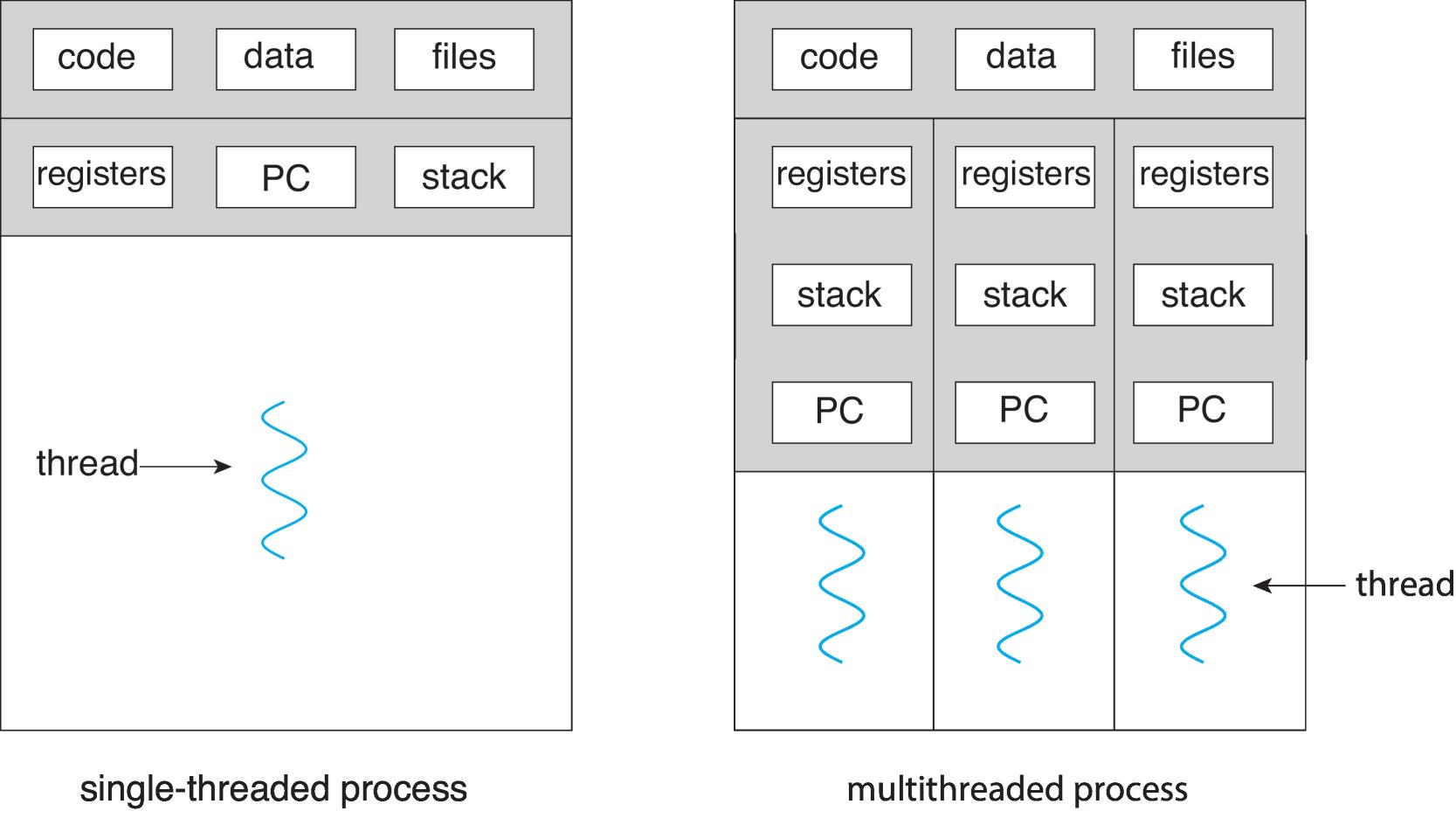

Single and Multithreaded Processes

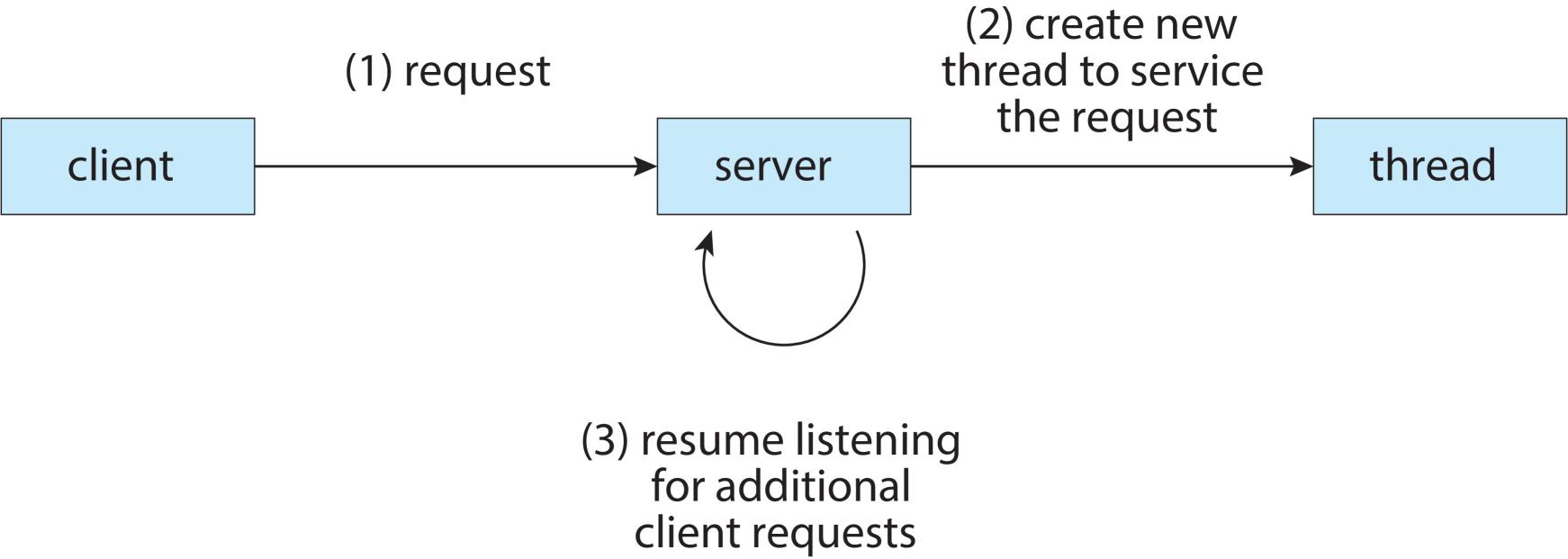

Multithreaded Server Architecture

Benefits

- Responsiveness – may allow continued execution if part of process is blocked, especially important for user interfaces

- Resource Sharing – threads share resources of process, easier than shared memory or message passing

- Economy – cheaper than process creation, thread switching lower overhead than context switching

- Scalability – process can take advantage of multicore architectures, with one or two threads per core

- But:

- More difficult to program with threads (a single process can now do multiple things at the same time).

- New categories of bug are possible (synchronization is then required between threads: Chapter 6).

What is a thread

-

A thread is the smallest sequence of programmed instructions within a process that can be managed independently by an OS scheduler. (or A thread is a single sequence stream within a process)

-

The implementation of threads and processes differs between operating systems, but in most cases a thread is a component of a process.

-

Multiple threads can exist within one process, executing concurrently and sharing resources such as memory, while different processes do not share these resources.

-

The threads of a process share its executable code and the values of its variables (code section, data section, OS resources) at any given time.

Threads vs. processes

Similarities (like process)

- Threads share CPU and only one thread active (running at a time.

- Threads within a processes execute sequentially.

- Thread can create children.

- If one thread is blocked, another thread can run.

Threads differ from processes in that:

| processes | threads |

|---|---|

| typically independent | subsets of a process |

| more state information | share process state and resources |

| Separate address spaces | Same address space |

| interact through ipc models: (shared memory/message passing) | variables |

| Slower context switching | Faster context switching |

| might or might not assist one another | designed to assist one another |

Multicore Programming

- Multicore or multiprocessor systems putting pressure on programmers, challenges include:

- Dividing activities

- Load Balance

- Data splitting

- Data dependency

- Testing and debugging

Parallelism and Concurrency

- *Parallelism(并行 同时做)* implies a system can perform more than one task simultaneously

- Multiple processors / cores are required

- Parallelism is a run-time property where two or more tasks are being executed simultaneously.

- *Concurrency(并发 一起做)* supports more than one task making progress

- Single processor / core, CPU scheduler providing concurrency by doing context switches

- Concurrency is a property of a program where two or more tasks can be in progress simultaneously.

- Parallelism implies concurrency, but concurrency does not imply parallelism

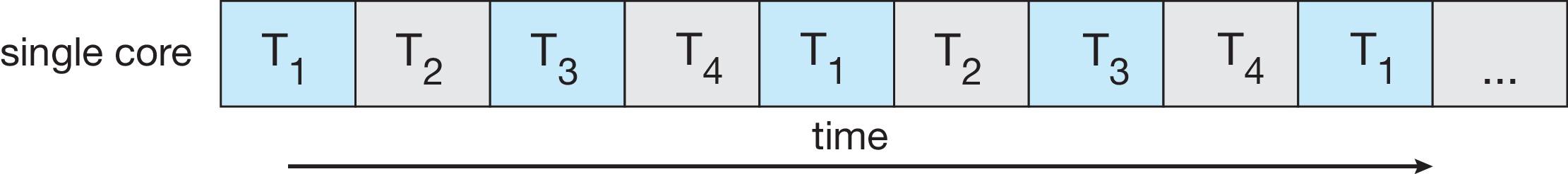

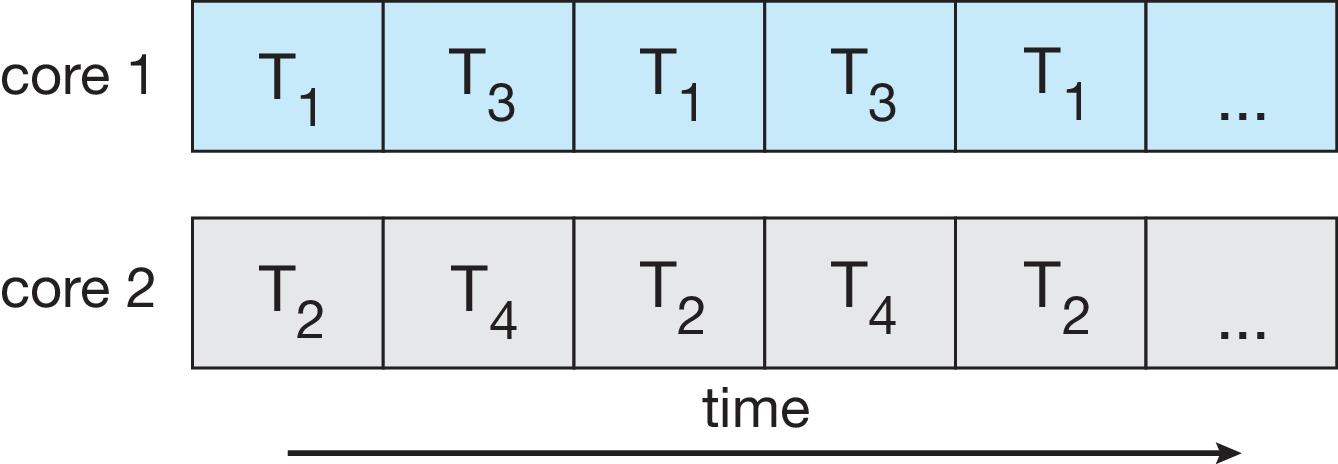

Concurrency vs. Parallelism

- Concurrent execution on single-core system:

- Parallelism on a multi-core system:

Multicore Programming

Types of parallelism

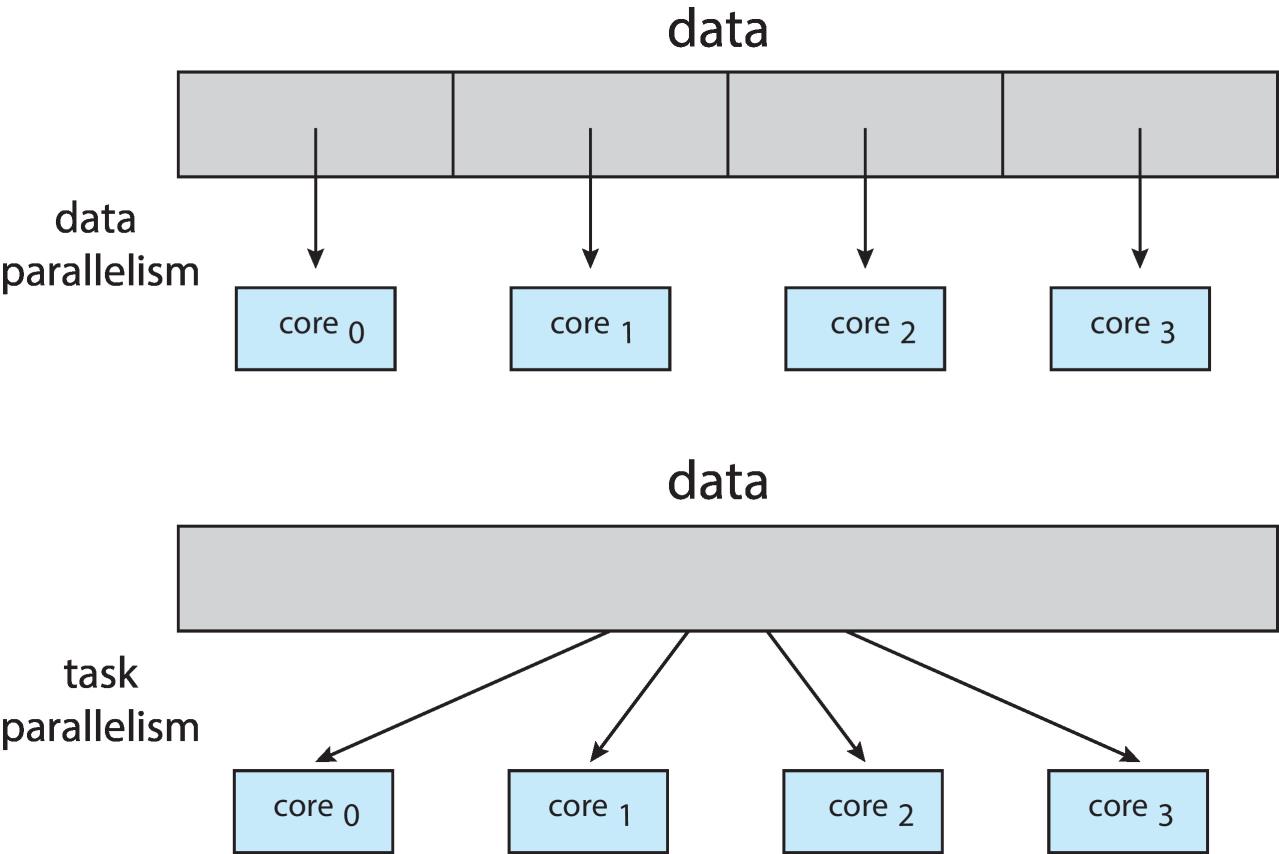

Data parallelism

distributes subsets of the same data across multiple cores, same operation on each

- Example: when doing image processing, two cores can each process half of the image

Task parallelism

distributing threads across cores, each thread performing unique operation

- Example: when doing sound processing, the sound data can move through each core in sequence, with each core doing a different kind of sound processing (filtering, echo, etc.)

Amdahl’s Law

- Identifies performance gains from adding additional cores to an application that has both serial and parallel components

$speedup\leq\frac{1}{S+\frac{1-S}{N}}$, where $S$ is serial portion, $N$ is processing cores.

- Proof:

$S$ is serial portion, $P$ is parallel portion of program.

So $S + P = 100% = 1$

Running time on one core: $R_1 = S + P = 1$

Running time on $N$ cores: $R_N ≥ S + \frac{P}{N} = S + \frac{1 – S}{N}$

$≥$, not $=$, because of extra communication required between threads.

$speedup = \frac{R_1}{R_N}\leq\frac{1}{S+\frac{1-S}{N}}$

-

Example: if the application is 75% parallel and 25% serial, moving from 1 to 2 cores: 0.25 + 0.75/2 = 0.625, results in a maximum speedup of 1/0.625 = 1.6 times.

-

As N approaches infinity, the maximum speedup approaches 1 / S

- Serial portion of an application has disproportionate effect on performance gained by adding additional cores

Gustafson’s Law

Gustafson’s law addresses the shortcomings of Amdahl’s law, which is based on the assumption of a fixed problem size, that is of an execution workload that does not change with respect to the improvement of the resources.

$S(speedup) = S+P*N = S+(1-S)*N= N+(1-N)*S$

where

$S$ is the theoretical speedup of the program with parallelism (scaled speedup); $N$ is the number of processors; $S$ and $P$: the fractions of time spent executing the serial parts and the parallel parts of the program on the parallel system, $S+P=1$

- Example: if the application is 75% parallel and 25% serial, moving from 1 to 2 cores: 2+(1-2) * 0.25 = 1.75 time faster.

Comparison Amdahl’s Law and Gustafson’s Law

In Amdahl’s law, the computational workload(problem) is fixed while the number of processors can be increased.

However, the speedup can NOT be increased to infinity even if the number of processors is increased to infinity. Therefore, it is referred to as a sequential bottleneck of multiprocessor systems.

If your goal is to solve a problem faster, this law is reasonable.

In Gustafson’s law, the workload size is increasing for large machines and it can retain scalability with the number of processors.

If the workload is scaled up to maintain a fixed execution time as the number of processors increases, the speedup increases linearly.

https://www.d.umn.edu/~tkwon/course/5315/HW/MultiprocessorLaws.pdf

User Threads and Kernel Threads

User Threads

User threads - management (thread creation, thread scheduling, etc.) done by user-level threads library.

- Advantages:

- No need for OS support so works even on very old or very simple OS that does not have system calls for thread management.

- No system call required so thread management is fast: only need a library function call.

- Disadvantage:

- When the kernel schedules processes, a process with only one thread gets as much CPU time as a process with many threads.

- All the thread scheduling inside a process must be done at user level (not done by kernel) so each thread must be nice and cooperate with the other threads in the process and regularly give CPU time to the other threads. This makes the program more complicated to write.

Kernel Threads

Kernel threads - supported by the kernel.

- Advantages:

- Kernel knows how many threads each process contains so it can give more CPU time to the processes with many threads.

- No need for threads to cooperate for scheduling (thread scheduling done automatically by kernel) so user program simpler to write.

- Disadvantages:

- Every thread management operation requires a system call so slower compared to user-level threads.

- Kernel’s PCB data structures more complex because the kernel needs to keep track of both processes and threads inside processes.

Examples

virtually all general purpose operating systems, including:

- Windows

- Linux

- Mac OS X

- iOS

- Android

Multithreading Models

- If threads are available both at user level and kernel level, then some user threads are normally associated with some kernel threads.

- Several models of association between user threads and kernel threads are possible:

- Many-to-One

- One-to-One

- Many-to-Many

Many-to-One

-

Many user-level threads mapped to single kernel thread.

-

One thread blocking (waiting for something) causes all threads to block (because their common kernel thread is blocked).

-

Multiple threads may not run in parallel on multicore system because only one may be in kernel at a time.

-

Few systems currently use this model.

-

Examples:

- Solaris Green Threads

- GNU Portable Threads

One-to-One

-

Each user-level thread maps to kernel thread.

-

Creating a user-level thread creates a kernel thread.

-

More concurrency than many-to-one.

-

Number of threads per process sometimes restricted due to overhead.

-

Examples:

- Windows

- Linux

Many-to-Many Model

- Allows many user level threads to be mapped to many kernel threads.

- Allows the operating system to create a sufficient number of kernel threads.

- Example: Windows with the ThreadFiber package.

- Otherwise not very common.

Thread Libraries

-

Thread library provides programmer with API for creating and managing threads

-

Two primary ways of implementing

- Library entirely in user space (user threads only)

- OS-level library supported by the kernel (user threads mapped to kernel threads, with one-to-one model for example).

-

Three primary thread libraries:

- POSIX Pthreads

- Windows threads

- Java threads

Pthreads (POSIX Standard API)

-

May be provided either as user-level or kernel-level

-

A POSIX standard (IEEE 1003.1c) API for thread creation and sychronization

-

Specification, not implementation

-

API specifies behavior of the thread library, implementation is up to developers of the library

-

Common in UNIX operating systems (Linux & Mac OS X)

-

Examples refer to Slides

Implicit Threading

-

Growing in popularity as numbers of threads increase, program correctness more difficult with explicit threads

-

Creation and management of threads done by compilers and run-time libraries rather than programmers

-

Five methods explored

- ThreadPools

- Fork-Join

- OpenMP(http://www.openmp.org/)

- GrandCentralDispatch

- Intel Threading Building Blocks (TBB)

Thread Pools

- Create a number of threads in advance in a pool where they await work

- Advantages:

- Usually slightly faster to service a request with an existing thread than create a new thread

- Allows the number of threads in the application(s) to be bound to the size of the pool

- Separating task to be performed from mechanics of creating task allows different strategies for running task

- i.e. Tasks could be scheduled to run periodically

- Windows API supports thread pools

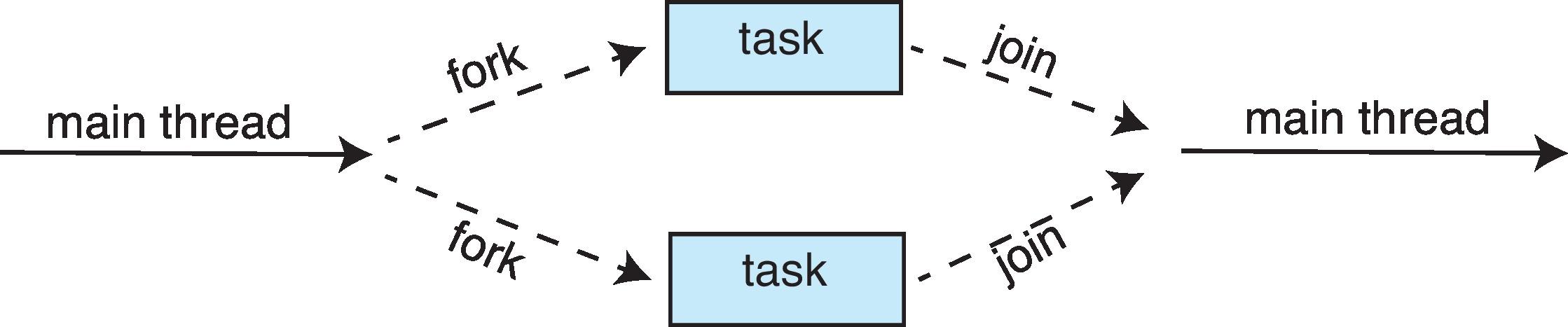

Fork-Join Parallelism

- Multiple threads (tasks) are forked, and then joined.

- Available in Java. (since Java SE7)

- Similar to Hadoop MapReduce operation

有点像 divide-and-conquer

OpenMP

- Set of compiler directives and an API for C, C++, FORTRAN

- Provides support for parallel programming in shared-memory environments

- Identifies parallel regions – blocks of code that can run in parallel

Thread-Local Storage

-

Thread-local storage (TLS) allows each thread to have its own copy of data

- Useful when you do not have control over the thread creation process (i.e., when using a thread pool)

- Different from local variables

- Local variables visible only during single function invocation

- TLS visible across function invocations

- Similar to static data

- TLS is unique to each thread

-

Linux declare a TLS variable:

__thread int number;

Thread Cancellation

- Terminating a thread before it has finished

- Thread to be canceled is target thread

- Two general approaches:

- Asynchronous cancellation terminates the target thread immediately

- Deferred cancellation allows the target thread to periodically check if it should be cancelled

- Two general approaches:

- Invoking thread cancellation requests cancellation, but actual cancellation depends on thread state

| Mode | State | Type |

|---|---|---|

| Off | Disabled | - |

| Deferred | Enabled | Deferred |

| Asynchronous | Enabled | Asynchronous |

-

If thread has cancellation disabled, cancellation remains pending until thread enables it

-

Default type is deferred

- Cancellation only occurs when thread reaches cancellation point

-

i.e.

pthread_testcancel() -

Then cleanup handler is invoked

-

- Cancellation only occurs when thread reaches cancellation point

-

On Linux systems, thread cancellation is handled through signals

Example: Windows Threads

- Windows API – primary API for Windows applications

- Implements the one-to-one mapping, kernel-level

- Each thread contains

- A thread id

- Register set representing state of processor

- Separate user and kernel stacks for when thread runs in user mode or kernel mode

- Private data storage area used by run-time libraries and dynamic link libraries (DLLs)

- The register set, stacks, and private data storage area are known as the context of the thread